How Customers Can Reduce AI Implementation Risks

Artificial Intelligence (AI) has evolved from a cutting-edge innovation to a foundational element of digital transformation. From automating operations to unlocking deeper customer insights, its potential is undeniable. Yet, with that potential comes an equally significant set of risks, ranging from data privacy concerns to model bias and regulatory non-compliance.

For organizations aiming to scale responsibly, reducing these risks isn’t optional; it’s strategic. Poorly managed AI can result in reputational damage, legal issues, and wasted investment. To fully realize the value of AI, businesses must embed risk management practices right from the planning and implementation stages.

This post explores what AI risk management entails, why it matters, how to identify risks early, and the proactive steps organizations can take to mitigate them, so that innovation doesn’t come at the cost of control.

Overview

AI risk management is essential to detect, assess, and respond to potential bias, misuse, and non-compliance.

Risks should be identified across data, models, processes, and vendors, not in isolation.

Proactive strategies include ongoing monitoring, transparency, role-based governance, and clear risk ownership.

A strong vendor risk management process prevents blind spots from third-party tools and datasets.

Auditive’s Trust Center unifies all risk elements, governance, compliance, and vendor controls under a single source of truth.

What is AI Risk Management?

AI risk management is the structured process of identifying, analyzing, mitigating, and continuously monitoring risks that arise during the development, deployment, and operation of artificial intelligence systems. These risks may include model bias, data privacy violations, lack of transparency, security vulnerabilities, regulatory non-compliance, and unintended consequences from automation.

Unlike traditional technologies, AI systems evolve over time through data inputs and model training, which makes their behavior harder to predict and control. As a result, the risks associated with AI are often dynamic and complex, demanding a proactive, multi-disciplinary approach to governance.

Effective AI risk management ensures that AI initiatives are not only innovative but also ethical, secure, and aligned with business and regulatory expectations. It helps businesses protect stakeholder trust, ensure model reliability, and reduce financial and legal exposure from system failures or misuse.

Why Is AI Risk Management Important?

Artificial Intelligence is reshaping how organizations operate, driving decisions, automating workflows, and accelerating customer experiences. But as its influence expands across business functions, the risks tied to AI systems grow in complexity. Managing those risks is not just about damage control; it’s central to ensuring reliable outcomes, stakeholder confidence, and long-term scalability.

Here are four critical reasons why AI risk management must be embedded into every implementation strategy:

1. Preventing security vulnerabilities

AI platforms are frequent targets for data breaches and adversarial attacks. These threats can compromise customer data, intellectual property, and operational integrity. Without appropriate guardrails, the consequences can extend beyond financial loss to long-term reputational damage.

Effective AI risk management includes pre-implementation assessments, continuous monitoring, and structured response protocols, helping reduce exposure before vulnerabilities become liabilities.

2. Avoiding ethical failures

Algorithmic bias, whether stemming from flawed training data or poor governance, can lead to discriminatory outcomes. In regulated industries like finance, healthcare, and employment, this isn’t just a performance flaw; it’s a compliance and ethical issue.

Risk management frameworks ensure that AI models are audited for fairness, transparency, and accountability, reducing the likelihood of biased decision-making and reinforcing equitable outcomes.

3. Building stakeholder trust

Trust is a prerequisite for AI adoption. Employees, partners, and customers need confidence in the systems that inform high-stakes decisions. When AI functions as a black box, skepticism grows.

Structured AI governance builds trust through explainability and traceability. Auditive helps enterprises address this by integrating AI oversight into existing Trust Center workflows, enabling continuous monitoring of risk signals and actionable insights across the lifecycle.

4. Maintaining regulatory compliance

As regulatory environments evolve to keep pace with AI’s scale, organizations face increasing scrutiny. Regulations such as the EU AI Act and U.S. data protection frameworks require clear documentation, transparency, and control.

An AI risk management strategy ensures that compliance is not reactive but built-in, minimizing the risk of penalties and improving audit readiness. Auditive supports this with automated compliance reporting and Vendor Risk Management capabilities, helping organizations stay ahead of regulatory demands while scaling responsibly.

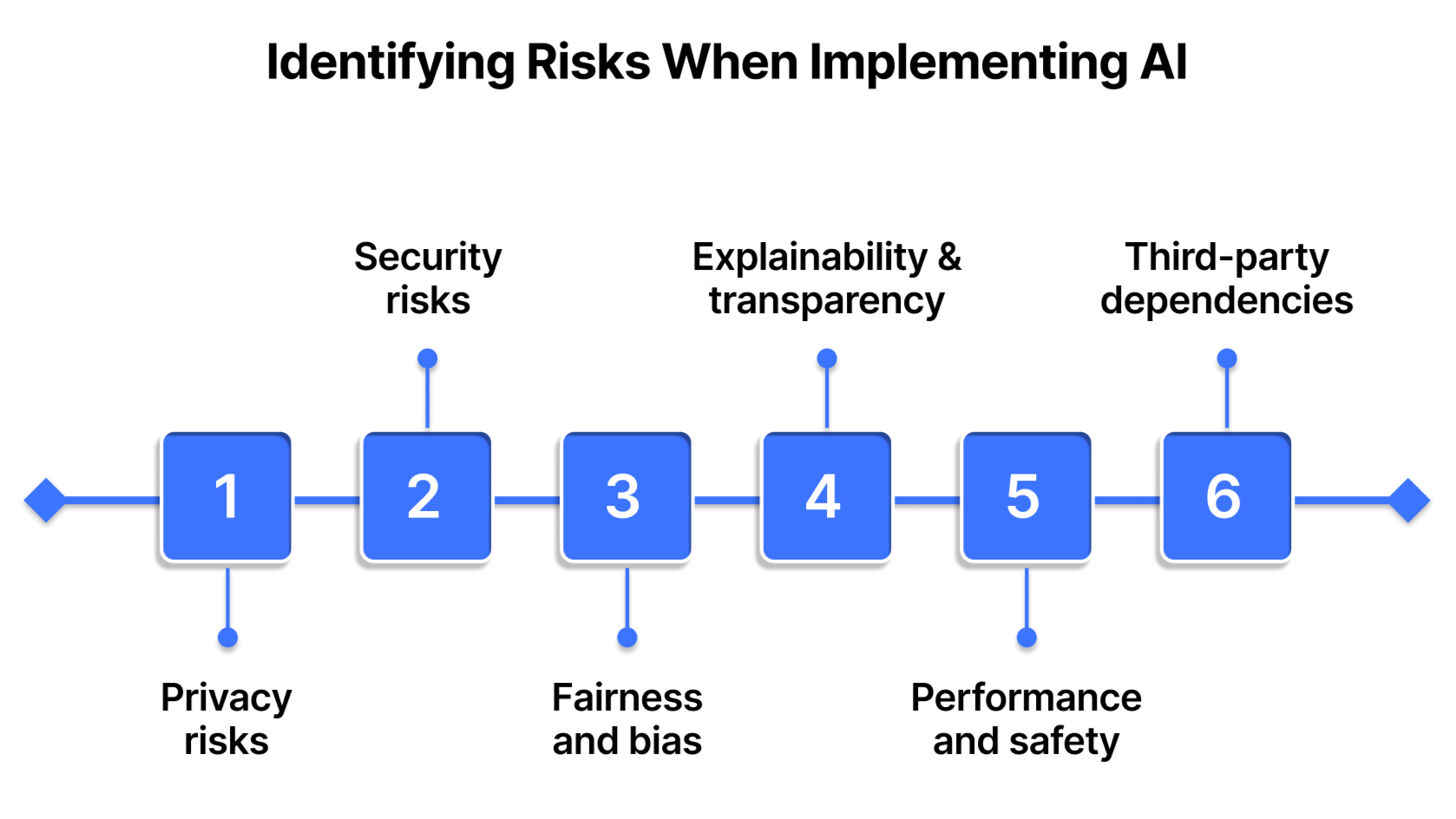

Identifying Risks When Implementing AI

Before deploying any AI system, it’s essential to map potential risks across both technical and operational domains. AI doesn’t operate in isolation; it relies on vast datasets, complex models, third-party providers, and human oversight. Each layer introduces unique risk exposures that, if not addressed early, can lead to operational failures, legal liabilities, or reputational harm.

A structured risk identification process helps organizations surface, categorize, and evaluate these exposures before they evolve into costly incidents. Below are six critical categories of AI risk to assess across your implementation pipeline:

1. Privacy risks

AI models depend on vast volumes of data, often sensitive or personal in nature. Improper handling, weak data governance, or vague consent practices can trigger regulatory scrutiny and violate consumer trust. Even compliant data usage can raise ethical concerns if perceived as invasive or nontransparent.

2. Security risks

AI systems introduce new security threats such as data poisoning, model inversion, and unauthorized model extraction. These risks can compromise not only the AI’s performance but also the underlying data integrity and intellectual property. Traditional security protocols often fall short of addressing these novel vulnerabilities.

3. Fairness and bias

Bias, whether from flawed data, historical inequities, or unchecked assumptions, can propagate through AI models and influence outcomes at scale. Unfair outputs tied to gender, race, or other protected attributes may violate anti-discrimination laws and damage stakeholder confidence.
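One simple way to make this risk measurable is to compare how often each group receives a favorable outcome. The sketch below computes per-group selection rates and the gap between them (often called the demographic parity difference). The field names `group` and `approved`, the sample decisions, and the idea of a review threshold are assumptions made for illustration; a real audit would use your own attributes and a fairness metric suited to the use case.

```python
from collections import defaultdict


def selection_rates(records, group_key="group", outcome_key="approved"):
    """Return the share of favorable outcomes for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for record in records:
        group = record[group_key]
        totals[group] += 1
        if record[outcome_key]:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(rates):
    """Largest difference in selection rate between any two groups."""
    return max(rates.values()) - min(rates.values())


# Hypothetical model decisions, for illustration only.
decisions = [
    {"group": "A", "approved": True},
    {"group": "A", "approved": True},
    {"group": "A", "approved": False},
    {"group": "A", "approved": True},
    {"group": "B", "approved": True},
    {"group": "B", "approved": False},
    {"group": "B", "approved": False},
    {"group": "B", "approved": False},
]

rates = selection_rates(decisions)
gap = demographic_parity_gap(rates)
print(rates, gap)
```

A large gap does not prove discrimination on its own, but it is a useful signal that a model deserves closer review before it influences outcomes at scale.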

4. Explainability and transparency

Opaque models limit accountability. If users or regulators can’t understand how a model arrives at a decision, especially in sectors like finance, healthcare, or insurance, the organization may face compliance issues and lose credibility in high-stakes scenarios.

5. Performance and safety

AI models must not only work in controlled test environments but also perform reliably in production. Errors in real-world contexts, whether caused by outdated data, system drift, or lack of retraining, can lead to safety concerns or breach performance guarantees set in contracts or service-level agreements.
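Drift of this kind can be checked by comparing the distribution of a model input or score in production against the distribution seen at training time. The sketch below uses the Population Stability Index (PSI), one common drift statistic, not the only option. The function name, the bin count, the small floor used to avoid dividing by zero, and the sample data are all assumptions chosen for illustration.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """Compare two samples of a numeric feature using PSI.

    Bins are equal-width and derived from the expected (baseline) sample.
    Values outside the baseline range fall into the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def shares(values):
        counts = [0] * bins
        for value in values:
            index = int((value - lo) / width)
            counts[min(max(index, 0), bins - 1)] += 1
        # Floor each share so empty bins do not cause log(0) or division by zero.
        return [max(count / len(values), 1e-6) for count in counts]

    expected_shares = shares(expected)
    actual_shares = shares(actual)
    return sum(
        (a - e) * math.log(a / e)
        for e, a in zip(expected_shares, actual_shares)
    )


# Hypothetical data: production values have shifted upward by 0.3.
baseline = [i / 100 for i in range(100)]
production = [value + 0.3 for value in baseline]

print(population_stability_index(baseline, baseline))
print(population_stability_index(baseline, production))
```

A frequently cited rule of thumb treats a PSI above roughly 0.25 as a significant shift, but that cutoff is a convention rather than a standard, so set thresholds that match your own tolerance and retraining schedule.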

6. Third-party dependencies

Many AI systems depend on third-party data providers, cloud environments, or pre-trained models. Without visibility into their development, security, and compliance practices, organizations take on additional risk. Vendor due diligence is critical, particularly in regulated industries.

These risks don’t operate in silos; they often overlap. For example, a model-extraction attack could simultaneously expose security and privacy weaknesses. Additionally, risks can emerge from multiple business contexts: data sourcing, model training, deployment infrastructure, legal contracts, and even company culture.

An effective way to uncover hidden vulnerabilities is to review historical incidents, analyze current use cases, and conduct scenario-based testing. “Red teaming” exercises, where internal experts try to break the model or identify edge cases, can expose blind spots missed during development.

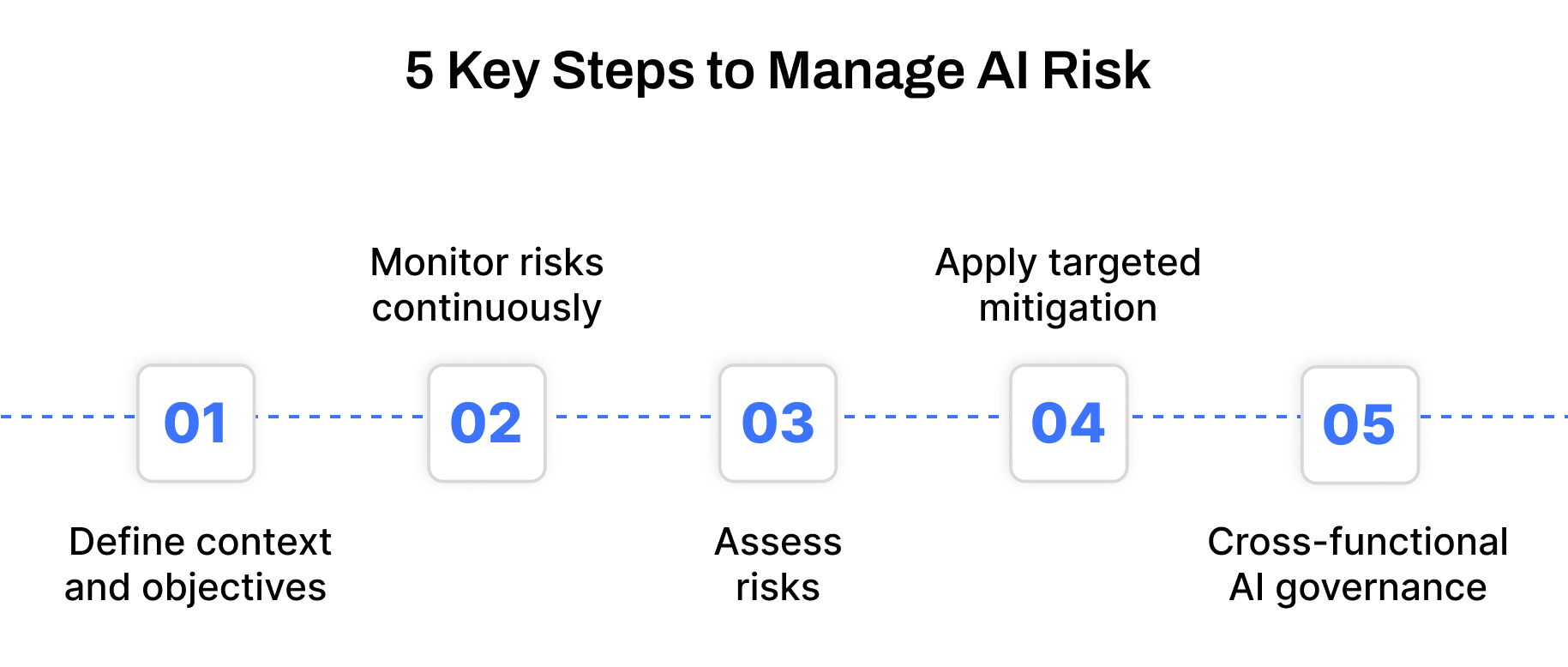

5 Key Steps to Manage AI Risk

Effectively managing AI risk requires a structured and proactive framework, one that goes beyond technical compliance and ensures your AI systems remain ethical, secure, and aligned with business goals. Below is a five-step approach to managing AI risk comprehensively:

Step 1. Define context and objectives

Begin by establishing the business environment, purpose, and boundaries for your AI initiative. Ask:

What specific problem is the AI solution addressing?

What are the measurable goals and expected outcomes?

In what regulatory, social, or financial context will the system operate?

Who will be impacted: users, partners, regulators?

Defining clear objectives ensures that the AI system remains aligned with enterprise strategy and minimizes unintended consequences from the outset.

Step 2. Identify and monitor risks continuously

Once risks are identified, whether ethical, operational, legal, or data-driven, they must be monitored in real time. Regular audits of datasets, algorithms, and output patterns help detect anomalies or potential bias early. Compare system outcomes against expected behavior to ensure fairness, accuracy, and accountability.

Where Auditive fits: Continuous monitoring is critical, especially for regulated industries. With Auditive’s real-time alerting and Trust Center, you gain full visibility into how AI-driven decisions are made, helping detect bias, flag anomalies, and maintain compliance across systems.

Step 3. Assess risks based on impact and likelihood

Evaluate each identified risk by estimating how likely it is to occur and how severe the consequences would be. Use structured tools like risk matrices or decision trees to help prioritize where mitigation efforts are most urgently needed. This step is essential for directing resources efficiently and avoiding reactive decision-making.
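A risk matrix can be as simple as scoring each risk on two scales and multiplying them. The sketch below assumes a 1 to 5 scale for both likelihood and impact, and rating bands of 15 and above for high and 8 and above for medium. Those scales, the bands, and the example risks are illustrative assumptions, not a prescribed standard; adjust them to your organization's risk appetite.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    name: str
    likelihood: int  # 1 (rare) to 5 (almost certain), assumed scale
    impact: int      # 1 (negligible) to 5 (severe), assumed scale

    @property
    def score(self) -> int:
        """Likelihood multiplied by impact, giving a value from 1 to 25."""
        return self.likelihood * self.impact


def rating(score: int) -> str:
    """Map a score to a band. The cutoffs are illustrative."""
    if score >= 15:
        return "high"
    if score >= 8:
        return "medium"
    return "low"


def prioritize(risks):
    """Return risks ordered from highest to lowest score."""
    return sorted(risks, key=lambda risk: risk.score, reverse=True)


# Hypothetical risk register entries, for illustration only.
register = [
    Risk("Vendor model lacks documentation", likelihood=2, impact=2),
    Risk("Training data includes unconsented personal data", likelihood=3, impact=5),
    Risk("Model drift degrades accuracy", likelihood=4, impact=3),
]

for risk in prioritize(register):
    print(f"{rating(risk.score):<6} {risk.score:>2}  {risk.name}")
```

Sorting by score gives a defensible first pass at where to spend mitigation effort, though teams often review the ordering by hand so that low-likelihood, severe-impact risks are not overlooked.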

Step 4. Apply targeted mitigation strategies

Once high-impact risks are prioritized, implement mitigation measures across three key layers:

Preventive controls: Encrypt data, enforce strict access policies, and anonymize sensitive inputs to limit vulnerabilities.

Detective controls: Use ongoing threat detection, logging, and behavioral analytics to catch issues early, before they escalate.

Corrective protocols: Establish clear response plans for events like data breaches or model failure, and ensure continuous learning from post-incident reviews.
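As one example of a preventive control, sensitive fields can be replaced with keyed hashes before data reaches a training or inference pipeline. Note that this is pseudonymization rather than full anonymization: records stay linkable through the hash, and anyone holding the key can recompute it. The function names, field names, and sample record below are hypothetical, and the key shown inline is a placeholder that would come from a secrets manager in practice.

```python
import hashlib
import hmac


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace a value with a keyed SHA-256 digest (HMAC)."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def scrub_record(record: dict, sensitive_fields: set, secret_key: bytes) -> dict:
    """Return a copy of the record with sensitive fields pseudonymized."""
    return {
        field: pseudonymize(str(value), secret_key) if field in sensitive_fields else value
        for field, value in record.items()
    }


# Placeholder key for illustration only; never hard-code real keys.
SECRET_KEY = b"replace-with-a-managed-secret"

# Hypothetical input record.
customer = {"email": "jane@example.com", "age_band": "30-39", "balance": 1250}

scrubbed = scrub_record(customer, {"email"}, SECRET_KEY)
print(scrubbed)
```

Because the same input and key always produce the same digest, records can still be joined across datasets without exposing the raw identifier.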

Auditive helps operationalize many of these steps by offering configurable controls and Vendor Risk Management workflows. Whether you’re integrating third-party models or building AI in-house, you can establish secure pipelines and maintain full traceability.

Step 5. Form a cross-functional AI governance committee

Build an internal AI committee that brings together key functions (data science, legal, compliance, product, and security) to collectively oversee projects. This team is responsible for approving use cases, addressing risk escalations, and aligning models with policy. It acts as a safeguard to ensure all AI deployments meet organizational standards, especially in high-stakes or customer-facing environments.

How Auditive Helps You Proactively Manage AI Risk

Effective AI risk management isn’t just about implementing policies; it’s about having the right tools and frameworks that evolve with your AI systems. Auditive empowers organizations with a unified Trust Center and robust Vendor Risk Management capabilities that directly support responsible and secure AI adoption.

By continuously monitoring third-party risks, regulatory exposures, and operational vulnerabilities, Auditive gives risk teams a dynamic view of where AI implementations might pose compliance or trust challenges. You can centralize documentation, audit trails, and real-time risk alerts across your vendor ecosystem, ensuring AI models are deployed with the transparency and control they demand.

With Auditive, you can:

Track and assess vendor compliance with AI-related controls.

Get notified of anomalies, bias, or security issues via integrated risk alerts.

Use the Trust Center to align internal stakeholders and external vendors on risk posture and governance.

Establish a clear chain of accountability for AI risk mitigation, from onboarding to operations.

For organizations navigating AI at scale, Auditive becomes the connective layer between governance goals and execution. Whether you’re deploying AI in finance, healthcare, or critical infrastructure, Auditive gives your teams the visibility and confidence to innovate securely.

Conclusion

AI success depends not just on innovation but on accountability. Ignoring risks like bias, data misuse, or regulatory gaps can derail progress quickly. A forward-looking AI strategy requires visibility, alignment, and safeguards across internal teams and external vendors.

Auditive provides the structure needed for intelligent, resilient AI. With centralized vendor risk management, it enables organizations to assess third-party models and tools before they become liabilities. The integrated Trust Center enhances oversight by aligning compliance, ethical guidelines, and operational integrity, all in one place.

Strengthen your AI with proactive governance.

Schedule a demo with Auditive to see how your organization can accelerate trustworthy AI adoption.

FAQs

Q1. What types of risks arise during AI implementation?

A1. Data bias, algorithmic discrimination, regulatory non-compliance, poor model explainability, and cybersecurity threats are key risks organizations must address.

Q2. How do I assess vendor-related AI risks?

A2. Use structured vendor risk management frameworks to evaluate vendors' data practices, compliance posture, model transparency, and contractual safeguards.

Q3. What makes an effective AI risk management framework?

A3. It includes continuous model validation, multi-level accountability, documentation of decisions, and monitoring tools to detect drift or anomalies.

Q4. Why is a Trust Center valuable in AI governance?

A4. A Trust Center centralizes documentation, risk metrics, model audit trails, and compliance status, enabling faster decisions and cross-team collaboration.

Q5. Can AI risk management improve stakeholder confidence?

A5. Yes. Transparent, well-governed AI increases trust across leadership, regulators, and customers, turning risk mitigation into a competitive advantage.